How Copilot Works to Protect Your Data

To understand why this matters, it helps to know that not all large language model (LLM) tools are equally safe with your data. If you read the terms of service and legal documentation for tools like ChatGPT closely, there’s a big grey area around whether the data you enter may be used to train future versions of their models.

The big worry is that anything you enter into a large language model may not stay private, because future versions of the model can be trained on data from past user interactions. This grey area has stopped many businesses from fully embracing ChatGPT, and some have banned it and similar tools within their organizations.

This is where Copilot differs: the data you enter, and the data Copilot can access, stays within your Microsoft 365 tenant, which is your organization’s own Microsoft 365 environment.

In Microsoft’s own words: “You are in control of your data. Microsoft doesn’t share your data with a third party unless you’ve granted permission to do so. Further, we don’t use your customer data to train Copilot or its AI features, unless you provide consent for us to do so. Copilot adheres to existing data permissions and policies, and its responses are based only on data that you personally can access.”

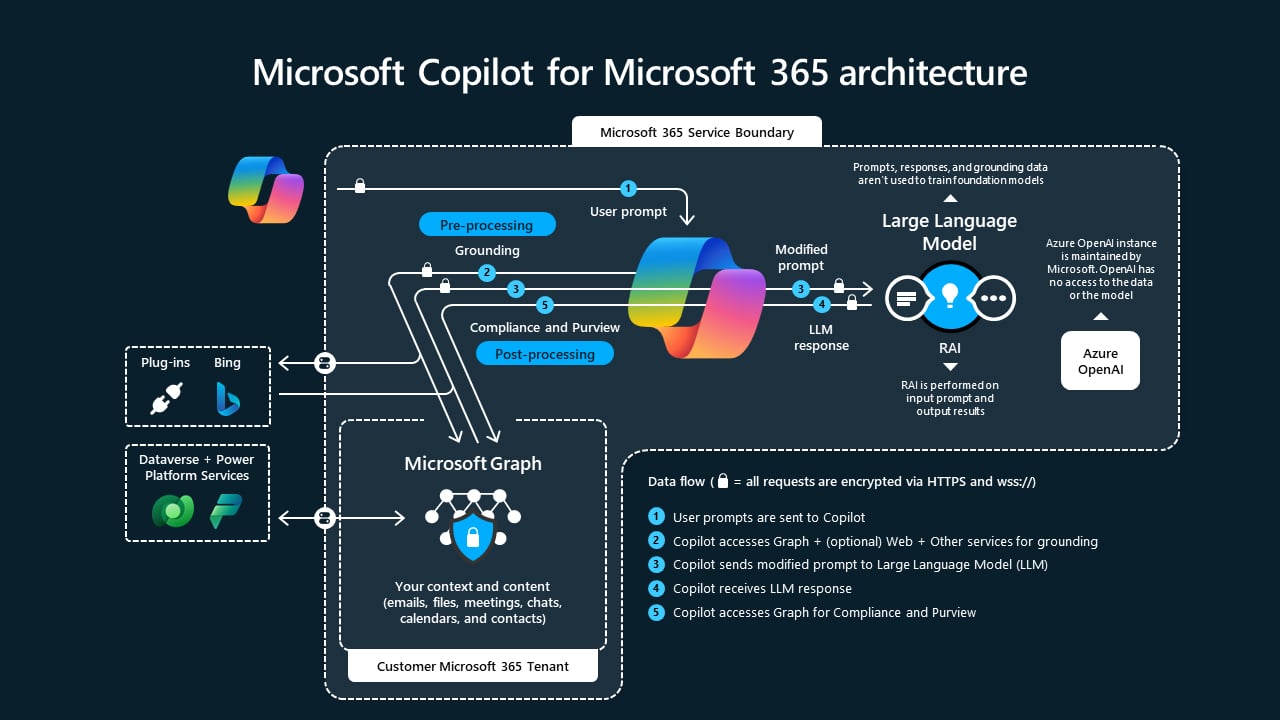

Microsoft Copilot Architecture

Here’s a simplified explanation of how Microsoft Copilot for Microsoft 365 works:

1. User Prompt: Copilot receives a prompt from a user in a Microsoft 365 app, such as Word or PowerPoint.

2. Pre-Processing: Copilot refines the prompt using a process called grounding, which makes the prompt more specific. It adds relevant text from files or other content the user already has access to, then sends this refined prompt to the large language model (LLM) for processing.

3. Post-Processing: Copilot processes the LLM’s response. This includes further grounding calls to Microsoft Graph, responsible AI checks, and security, compliance, and privacy reviews. It also generates any commands the app needs.

4. User Review: The final response is sent back to the app for the user to review and assess.

The prompt and the response are called the “content of interactions,” and these interactions are recorded in the user’s Copilot interaction history.
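To make the four steps above more concrete, here is a toy Python model of the flow. It is not Microsoft’s implementation or API: the class and method names (`CopilotFlowSketch`, `ground`, `call_llm`, `post_process`), the keyword matching used for grounding, the `allowed_users` set standing in for Microsoft 365 permissions, and the `BLOCKED_TERMS` policy check are all hypothetical simplifications. What it illustrates is the order of the steps and the key property described above: grounding only draws on documents the requesting user can already access, and each prompt and response pair is saved to that user’s interaction history.

```python
from dataclasses import dataclass, field

# Hypothetical policy list used by the toy post-processing check (step 3).
BLOCKED_TERMS = {"password"}


@dataclass
class Document:
    title: str
    text: str
    allowed_users: set[str]  # hypothetical stand-in for existing Microsoft 365 permissions


@dataclass
class Interaction:
    prompt: str
    response: str


@dataclass
class CopilotFlowSketch:
    documents: list[Document]  # content inside a single (hypothetical) tenant
    history: dict[str, list[Interaction]] = field(default_factory=dict)  # per-user history

    def ground(self, user: str, prompt: str) -> str:
        """Step 2: add relevant content, but only from documents this user can already access."""
        keywords = {w.strip(".,?!").lower() for w in prompt.split()}
        context = [
            doc.text
            for doc in self.documents
            if user in doc.allowed_users and keywords & set(doc.title.lower().split())
        ]
        return prompt + "\nContext: " + " | ".join(context)

    def call_llm(self, grounded_prompt: str) -> str:
        """Placeholder for the LLM: it simply echoes the grounded prompt with a prefix."""
        return "Answer using -> " + grounded_prompt

    def post_process(self, response: str) -> str:
        """Step 3: a toy policy check standing in for the real security and compliance reviews."""
        if any(term in response.lower() for term in BLOCKED_TERMS):
            return "[response withheld by policy]"
        return response

    def handle(self, user: str, prompt: str) -> str:
        """Steps 1 to 4: receive the prompt, ground it, call the LLM, post-process, return."""
        grounded = self.ground(user, prompt)
        response = self.post_process(self.call_llm(grounded))
        # The prompt and response together form the "content of interactions".
        self.history.setdefault(user, []).append(Interaction(prompt, response))
        return response


if __name__ == "__main__":
    docs = [
        Document("Q3 budget", "Marketing budget is 40k.", {"alice"}),
        Document("Salary review", "Confidential salary bands.", {"hr_lead"}),
    ]
    copilot = CopilotFlowSketch(docs)
    print(copilot.handle("alice", "Summarize the budget"))
    print(copilot.handle("alice", "Summarize the salary review"))
```

In this example, Alice’s budget question is grounded with the budget document she can access, while her question about the salary review gets no extra context, because that document is restricted to another user even though it sits in the same tenant. Both prompts still end up in her interaction history.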

Why This Matters for Your Business

Microsoft Copilot for Microsoft 365 runs through these steps for each request, using your organization’s own data to produce relevant results. Because that data is only used within your Microsoft 365 environment, and only according to each user’s existing permissions, your sensitive information remains secure. This means you can use powerful AI tools to improve productivity and efficiency without compromising on data security. This level of control and privacy is especially important for small and medium-sized businesses that need to protect their data while still benefiting from advanced AI capabilities.

Understanding how Microsoft Copilot for Microsoft 365 works, and Microsoft’s commitment to data security, helps ease concerns about privacy and data protection. By keeping your data within your Microsoft 365 tenant and following your existing data permissions and policies, Copilot helps keep your business data and information secure. This allows you and your IT provider to integrate AI into your business operations safely.